Project update 9 of 17

Neural Networks - Part I

Hello everyone, and thank you for signing up and backing this project!

Note: This update comes to you courtesy of our intern, Sandipan Chatterjee, who put together a couple of projects to demonstrate the advanced functionality you can achieve on the SoM.

Neural Networks!

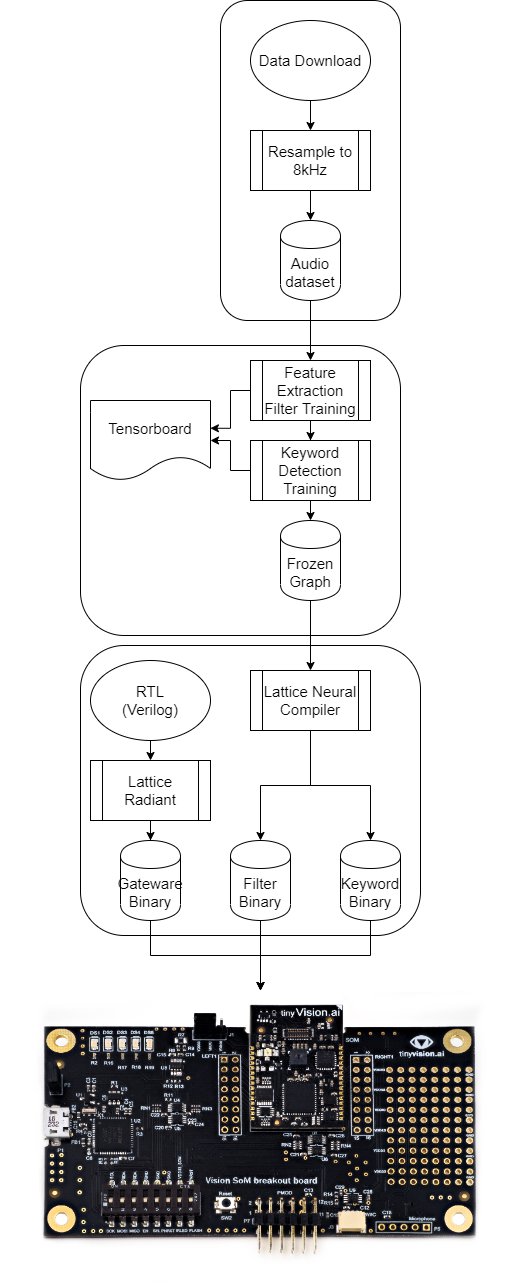

OK, so we have some hardware that can do object detection and keyword spotting. Great! The sample code works. To make this really useful, so that it can detect cats, dogs, and your favorite sounds, you need the infrastructure to train your own models.

Background on Neural Networks

We will implement a class of Neural Networks called Convolutional Neural Networks (CNN for short) on the Vision SoM. Because the SoM is a piece of uncommitted hardware, other classes of networks can also be implemented, and they will be part of the roadmap for this device.

Training

We will follow a two-step process to create and use a CNN. The first step is unimaginatively called "training". It is followed by deployment of the trained models on devices, where they perform "inference". The weights between neurons are modified during training but kept constant during inference.

Neural networks are trained by presenting the network with an image or other data and comparing its output with the ground truth. The difference produces an error signal that is used to tweak the weights in the network. Assuming you set the learning rate and other network parameters correctly and have good-quality annotated data, the weights will converge over the course of training. This convergence indicates that the network has learned about the inputs presented to it.
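To make the idea concrete, here is a minimal sketch of that loop in plain Python. It is not the Lattice SensAI code and not a CNN: a single linear "neuron" stands in for the network, and the names `train` and `infer`, the learning rate, and the toy data are all assumptions chosen for illustration. It shows the error signal nudging the weights during training and the weights staying fixed during inference.

```python
def train(samples, learning_rate=0.05, epochs=500):
    """Fit prediction = w * x + b to (x, truth) pairs by gradient descent.

    Hypothetical helper for illustration only.
    """
    w, b = 0.0, 0.0  # untrained weights
    for _ in range(epochs):
        for x, truth in samples:
            prediction = w * x + b
            error = prediction - truth  # error signal vs. ground truth
            # Tweak each weight a little in the direction that reduces the error.
            w -= learning_rate * error * x
            b -= learning_rate * error
    return w, b


def infer(w, b, x):
    """Inference: the weights are only read, never modified."""
    return w * x + b


# Toy annotated data generated from truth = 2 * x + 1 (an assumption).
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train(samples)
print(f"learned w={w:.3f}, b={b:.3f}, prediction for x=4: {infer(w, b, 4.0):.3f}")
```

With clean data and a sensible learning rate, the weights settle near the values that generated the data. Setting the learning rate much higher in this sketch makes the updates overshoot and diverge, which is the small-scale version of the "set the parameters correctly" caveat above.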

You will need a software framework (usually Python-based) as well as powerful GPUs to do the training. This sort of compute is normally out of reach for most developers. However, Google has created a framework called Colab where you can run your training on bleeding-edge hardware at no cost!

We took the Lattice SensAI example code and ported it to the Google Colab framework so that you can easily try out the training for yourself and experience the excitement of going end-to-end with the process. This code is now released on GitHub.

In the next update, we’ll look at a few examples of what the SoM can do!

Help define the project’s future!

The project is not yet buttoned up! A lot of work remains on software, documentation, marketing, the training framework, hardware verification, and so on. We invite you to collaborate using the Crowd Supply Discord channel and/or the tinyVision Discord channel.

Acknowledgements

Both the object and audio detection projects needed a bit of digging through code. This was especially difficult because understanding other people’s code without much documentation can be painful. A shout out to the Lattice team for their continued assistance throughout our journey!