tinyVision.ai

Video & Cameras

FPGA Boards

Embedded computer vision is a highly multidisciplinary field that requires expertise in optics, image sensors, hardware, firmware, and more. As a result, the barrier to experimenting with technology in this field is quite high.

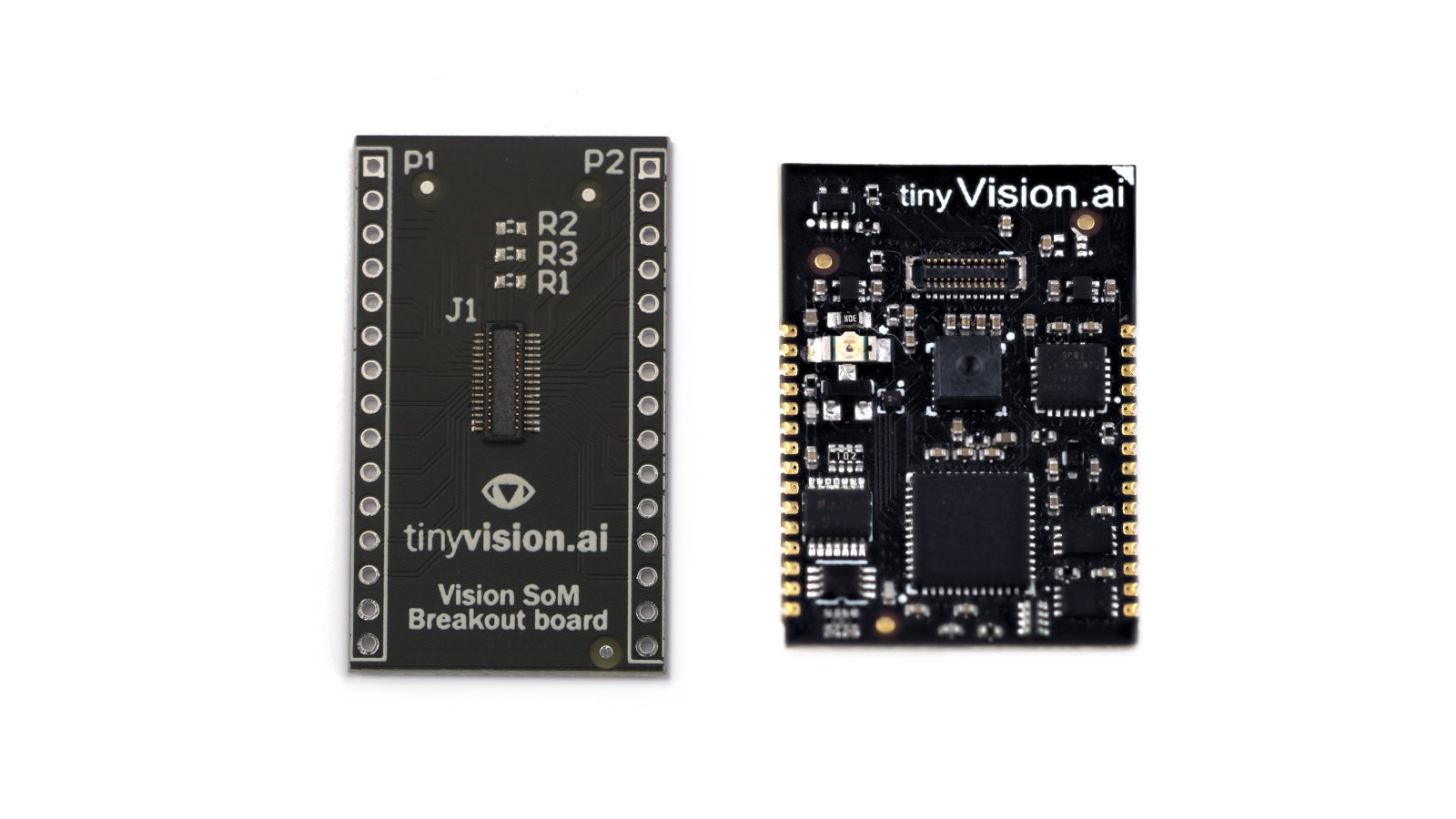

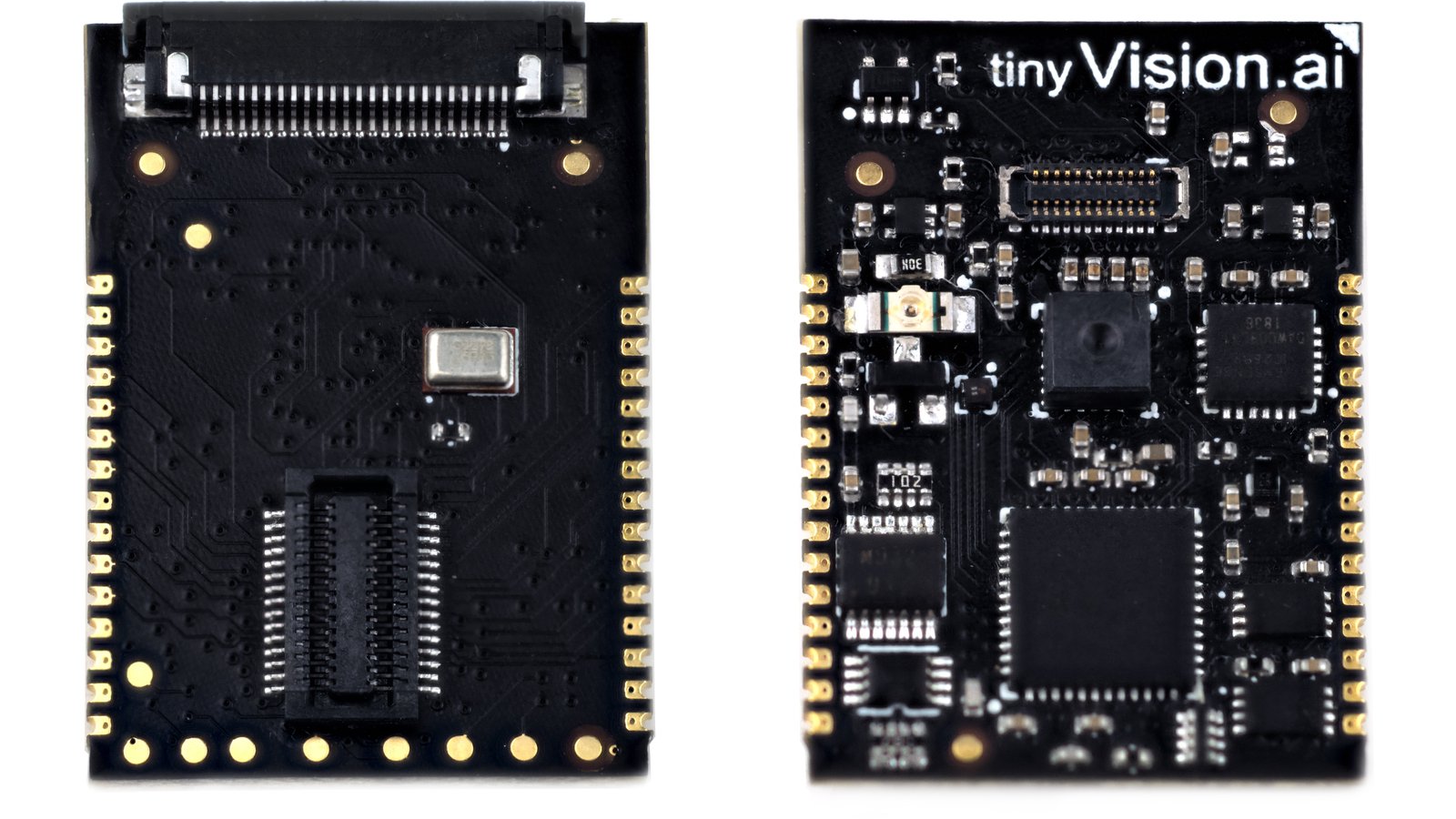

The Vision FPGA SoM is a Lattice FPGA-based System on Module that integrates an ultra-low-power vision sensor, a 3-axis accelerometer/gyroscope, and an I²S MEMS microphone in a small form factor (3 cm x 2 cm).

This device is built for developers who want not only to play with this technology but also to have a path to integrating it into real-world products. We designed the device with the following thoughts in mind:

The modular concept is summarized in a talk given at a tinyML meetup in 2019.

By including the most commonly used sensors in a configurable platform built around an FPGA with an open source toolchain, this device enables developers to experiment and build quickly. Sample FPGA code and host code will be provided as a jumping-off point for backers.

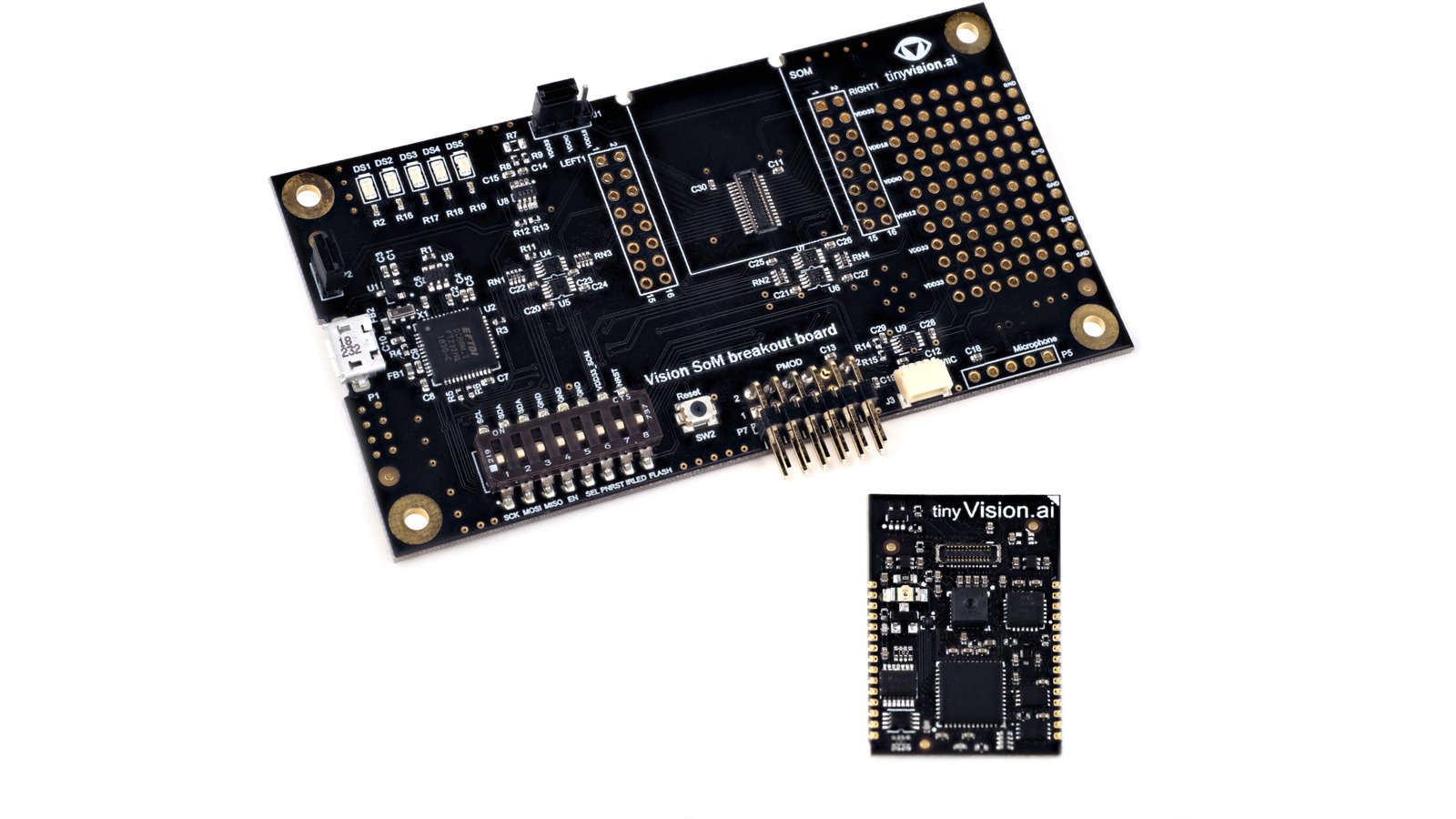

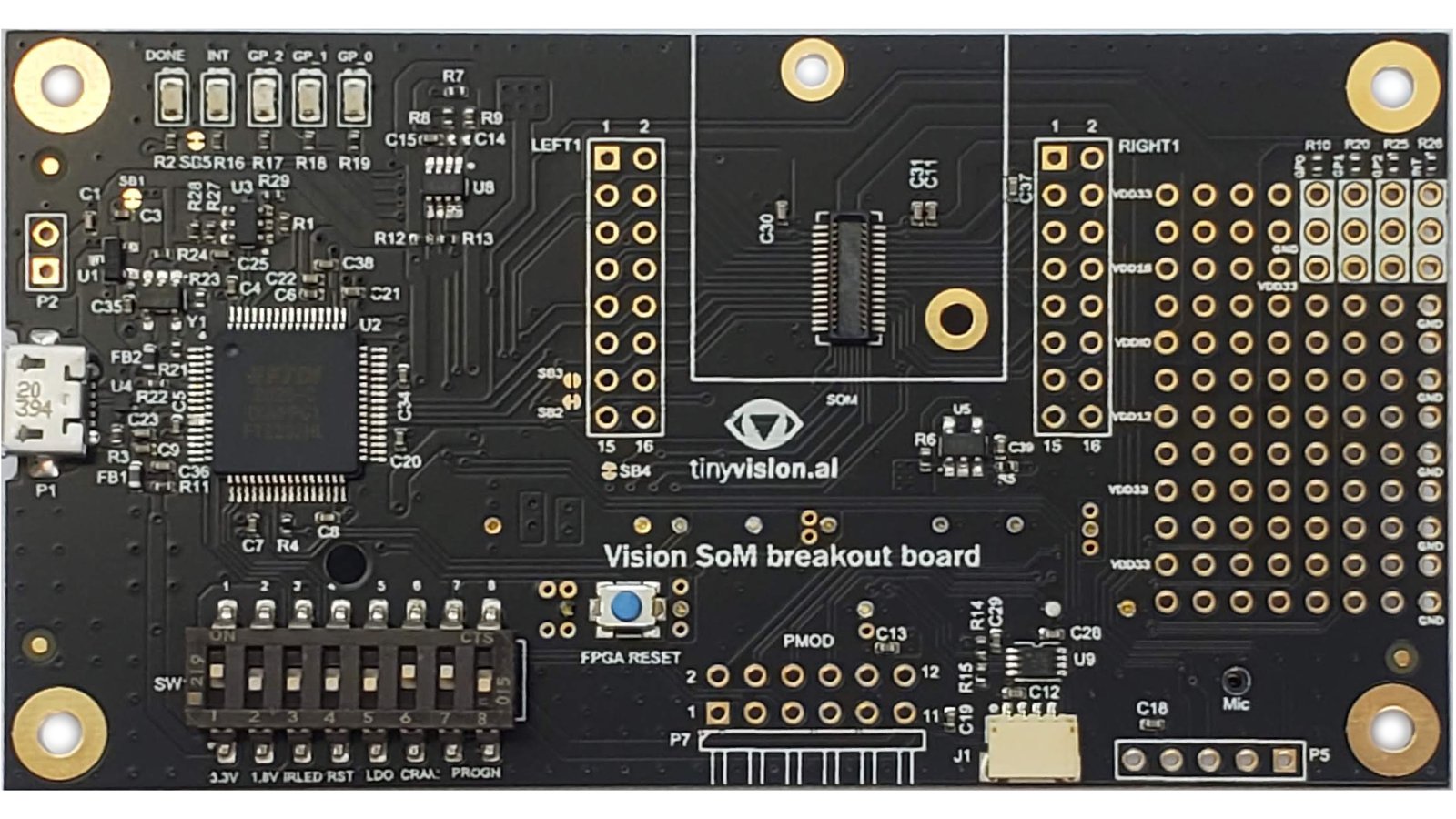

The Developer Board provides the following functionality that enables application development using the module:

The Developer Board also has a Raspberry Pi HAT connector, which leverages the extensive RPi ecosystem for developing applications with the Vision FPGA SoM. This enables new use cases for a Raspberry Pi Zero W (including the ones shown in Adafruit Pi Hat):

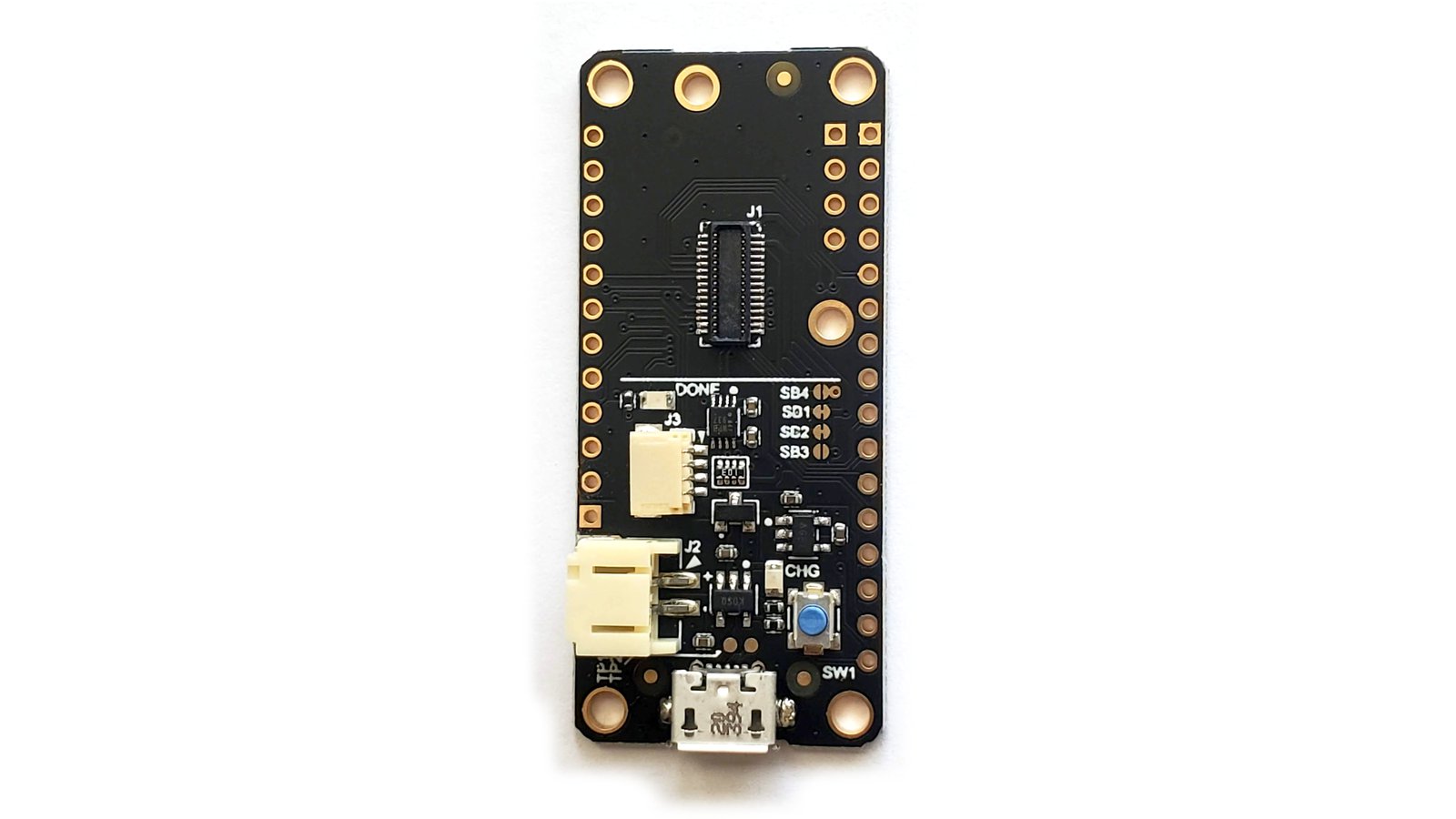

The breakout board is in the Adafruit Feather form factor and has the following features:

Note: the FPGA programming must be done using an external SPI master. The USB port is purely to supply power.
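As a rough illustration of what an external SPI master has to do, here is a minimal sketch of the iCE40 SPI slave configuration sequence. It assumes the master loads the bitstream directly into the FPGA (rather than writing the on-module flash), which this page does not specify. The callback names (`set_creset`, `set_ss`, `spi_write`, `read_cdone`) are hypothetical hooks that you would wire to your own GPIO and SPI driver, and the delays and dummy-clock counts are conservative assumptions that should be checked against Lattice's iCE40 programming and configuration guide.

```python
import time
from typing import Callable


def configure_ice40(
    bitstream: bytes,
    set_creset: Callable[[bool], None],  # hypothetical: drive CRESET_B (True = high)
    set_ss: Callable[[bool], None],      # hypothetical: drive SPI_SS (True = high)
    spi_write: Callable[[bytes], None],  # hypothetical: clock bytes out, MSB first
    read_cdone: Callable[[], bool],      # hypothetical: sample the CDONE pin
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Sketch of loading a bitstream into an iCE40 acting as an SPI slave."""
    # Hold the FPGA in reset with SS low so it comes up in SPI slave mode.
    set_ss(False)
    set_creset(False)
    sleep(0.001)  # assumed reset pulse width, far above the minimum
    set_creset(True)
    sleep(0.002)  # assumed wait for the configuration memory to clear

    # Eight dummy clocks with SS high, then the bitstream with SS low.
    set_ss(True)
    spi_write(b"\x00")
    set_ss(False)
    spi_write(bitstream)
    set_ss(True)

    # Trailing dummy clocks (160 here, an assumed safe margin) let CDONE
    # rise and the user I/O pins activate.
    spi_write(b"\x00" * 20)
    return read_cdone()
```

Passing the pin and bus operations in as callbacks keeps the sequence independent of any particular host, so the same function could sit on top of a Raspberry Pi, a microcontroller running MicroPython, or a test double.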

| Item | Vision FPGA SoM | iCEBreaker | TinyFPGA BX | Tomu FPGA | |
|---|---|---|---|---|---|
| OSHW? | OSHW | OSHW | OSHW | OSHW | Closed |
| Price | $100 | $69 | $38 | $45 | $49.49 |
| Schematics Published? | Yes | Yes | Yes | Not yet | Yes |
| Design Files Published? | Not yet | Yes | Yes | Not yet | No |
| Volume Production Friendly? | Yes | No | No | No | No |
| FPGA | | | | | |
| FPGA Model | iCE40UP5K | iCE40UP5K | iCE40LP8K | iCE40UP5K | iCE40HX1K |
| Logic Capacity (LUTs) | 5280 | 5280 | 7680 | 5280 | 1280 |
| Internal RAM (bits) | 120k + 1024k | 120k + 1024k | 128k | 120k + 1024k | 64k |
| Multipliers | 8 | 8 | 0 | 8 | 0 |
| Peripherals | | | | | |
| USB Interface | FTDI 2232HQ (dev kit) | FTDI 2232HQ | On FPGA bootloader | On FPGA bootloader | FTDI 2232HL |
| User IOs | 8 | 27 + 7 | 41 + 2 | 4 + 2 | 18 |
| Pmod Connectors | 1 (dev board) | 3 | 0 | 0 | 1 |
| User Buttons | 0 | 1 tactile + 3 tactile on breakoff Pmod | 1 reset | 2 capacitive | 0 |
| User LED | 1 tricolor, high-power IR LED | 2 on-board | 4 on dev board | 2 + 5 on breakoff Pmod | 5 |
| Onboard Clock | 12 MHz, shared with FTDI | 12 MHz, shared with FTDI | 16 MHz | 12 MHz | 12 MHz, shared with FTDI |
| Flash | 8 Mbit QSPI | 128 Mbit QSPI DDR | 8 Mbit SPI | 16 Mbit SPI | 32 Mbit SPI |
| SRAM | 64 Mbit QSPI PSRAM | No | No | No | No |
| IMU | InvenSense 6 DOF (accelerometer + gyroscope) | No | No | No | No |
| Mic | MEMS I²S microphone | No | No | No | No |
| Power Measurement | On dev kit | No | No | No | No |
| Open Source Toolchain | Yes | Yes | Yes | Yes | Yes |
| APIO | Yes | Yes | Yes | Yes | Yes |
| Icestudio | Yes | Yes | Yes | Not yet | Yes |
| Migen | No | Yes | Yes | No | Yes |
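The memory figures in the table are quoted in bits, while buffers are usually budgeted in bytes. The short sketch below converts the Vision FPGA SoM column to KiB. It assumes that "k" and "M" in the table mean 1024 and 1024 × 1024 bits respectively; the dictionary keys are illustrative labels, not official part names.

```python
KBIT = 1024         # assumption: "k" in the table means 1024 bits
MBIT = 1024 * KBIT  # assumption: "M" means 1024 * 1024 bits


def bits_to_kib(bits: int) -> float:
    """Convert a size in bits to kibibytes."""
    return bits / 8 / 1024


# Figures taken from the Vision FPGA SoM column of the comparison table.
som_memory_bits = {
    "internal RAM": 120 * KBIT + 1024 * KBIT,
    "QSPI flash": 8 * MBIT,
    "QSPI PSRAM": 64 * MBIT,
}

som_memory_kib = {name: bits_to_kib(bits) for name, bits in som_memory_bits.items()}

for name, kib in som_memory_kib.items():
    print(f"{name}: {kib:.0f} KiB")
```

Under these assumptions the internal RAM comes to 143 KiB, the flash to 1024 KiB, and the PSRAM to 8192 KiB (8 MiB).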

All data about the SoM is captured in the Vision FPGA SoM GitHub repo and will be updated over time with sample code, Colab notebooks, etc.

Produced by tinyVision.ai in San Diego, CA.

Sold and shipped by Crowd Supply.

A Vision FPGA SoM to play with!

One Vision FPGA SoM and one Breakout Board.

A Vision FPGA SoM and Developer Board.

Two Vision FPGA SoMs.

Pack of 10 Vision FPGA SoMs at a 5% discount.

The SoM breakout board brings all SoM pins out to 2.54 mm pitch pins for breadboarding. You can't do without it! SoMe assembly is required to solder the 2.54 mm headers on. Note: SoM is not included.

One Developer Board that breaks out the SoM IO with in-line LEDs, includes a Raspberry Pi HAT connector, allows for USB power (with power monitoring) and programming, includes Pmod and Qwiic connectors, and has room for prototyping. Note: SoM is not included.

tinyVision.ai works closely with clients to incorporate low-power computer vision (CV) into their devices. We enable CV in hardware through tightly integrated CV modules with a clean API.