Project update 6 of 15

Machine Learning (ML)-Driven RF Signal Detection

by Andrey Bakhmat, Timur Davydov

Over the last five to ten years, we’ve witnessed a fascinating trend: neural networks are taking over from traditional methods of solving complex problems. Image recognition and machine translation are two good examples, where deep neural networks outperform classic approaches that have been around for decades.

Now, the big question is: will this revolution extend to wireless technologies as well? It’s not a straightforward matter, and there’s no simple answer. In RF analysis, for instance, despite a vast mathematical framework and impressive results, some rightly consider the field a “wicked problem”. So how can we use deep learning effectively when even defining the problem is challenging?

Faced with such complexity, we need effective tools. So we opted to start with a relatively simple problem, modulation recognition, to assess these tools and how well they work within the WSDR platform.

We will attempt to recognize different modulation types by converting the sample stream into a stream of normalized vector IQ diagrams. The neural network then processes these diagrams as images, just as it would in an image-recognition task.

Is this approach naive? Certainly! Surprisingly, however, as we will see later, it works. Moreover, it provides a solid foundation for future improvements and even a complete overhaul of the entire model.

Let’s shift our focus to more practical matters.

Our ML application consists of two essential components:

- The back-end, written in Node.js, which trains the model and stores the training results.

- The front-end, which performs IQ recognition using the pre-trained model.

Splitting the app this way keeps model training in the back-end, while the front-end only has to apply the trained model to new data.
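On the front-end, applying the trained model ultimately means turning the network's output for one IQ image into a modulation label. The sketch below makes three assumptions. First, the model ends in one raw score (logit) per modulation class. Second, the label list `LABELS` is hypothetical. Third, in TensorFlow.js the scores would come from the model's `predict()` call rather than from a hard-coded array.

```javascript
// Hypothetical label set; in the app, labels come from the SigMF
// annotation descriptions used during training.
const LABELS = ["BPSK", "QPSK", "8PSK", "16QAM"];

// Numerically stable softmax: subtract the largest score before exponentiating.
function softmax(logits) {
  const max = Math.max(...logits);
  const exps = logits.map((x) => Math.exp(x - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

// Map the raw scores for one constellation image to the most likely label.
function decodePrediction(logits, labels = LABELS) {
  if (logits.length !== labels.length) {
    throw new Error("expected one score per label");
  }
  const probs = softmax(logits);
  let best = 0;
  for (let i = 1; i < probs.length; i++) {
    if (probs[i] > probs[best]) best = i;
  }
  return { label: labels[best], confidence: probs[best] };
}

// Usage with made-up scores: the second class (QPSK) has the highest score.
console.log(decodePrediction([0.1, 2.3, -1.0, 0.5]));
```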

ML app architecture: Back-end Training and Front-end Inference

The current version of the app uses a simple two-dimensional convolutional neural network (CNN). In applications like this, however, CNNs can potentially be replaced by transformers without significantly compromising quality, which leaves room to experiment with the network’s architecture.
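To make "two-dimensional convolutional network" concrete: an early stage of such a network slides small kernels over the constellation image and then pools the responses.

The plain-JavaScript sketch below shows one such stage on a toy 4x4 image. It applies a single convolution (stride 1, no padding) with a ReLU, followed by a 2x2 max-pool. The kernel is hand-picked for illustration, whereas a real model learns its kernels, and the app itself relies on TensorFlow rather than hand-written loops.

```javascript
// Single-channel 2D convolution, stride 1, "valid" padding, followed by ReLU.
// As in deep-learning frameworks, this is cross-correlation (kernel not flipped).
function conv2dRelu(image, kernel, bias = 0) {
  const outH = image.length - kernel.length + 1;
  const outW = image[0].length - kernel[0].length + 1;
  const out = [];
  for (let y = 0; y < outH; y++) {
    const row = [];
    for (let x = 0; x < outW; x++) {
      let acc = bias;
      for (let i = 0; i < kernel.length; i++)
        for (let j = 0; j < kernel[0].length; j++)
          acc += image[y + i][x + j] * kernel[i][j];
      row.push(Math.max(0, acc)); // ReLU activation
    }
    out.push(row);
  }
  return out;
}

// 2x2 max pooling with stride 2; an odd trailing row/column is dropped.
function maxPool2x2(fmap) {
  const out = [];
  for (let y = 0; y + 1 < fmap.length; y += 2) {
    const row = [];
    for (let x = 0; x + 1 < fmap[0].length; x += 2) {
      row.push(Math.max(fmap[y][x], fmap[y][x + 1], fmap[y + 1][x], fmap[y + 1][x + 1]));
    }
    out.push(row);
  }
  return out;
}

// Toy "constellation image" with a bright 2x2 blob in the middle.
const image = [
  [0, 0, 0, 0],
  [0, 1, 1, 0],
  [0, 1, 1, 0],
  [0, 0, 0, 0],
];
const kernel = [[1, 1], [1, 1]]; // hand-picked, not learned
const fmap = conv2dRelu(image, kernel); // [[1, 2, 1], [2, 4, 2], [1, 2, 1]]
console.log(fmap, maxPool2x2(fmap)); // pooled map: [[4]]
```

In a typical small image CNN, a few such convolution and pooling stages are followed by a dense layer that outputs one score per class.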

Currently, our model is capable of determining the type of modulation; in the future, we plan to update both the model and the dataset to enable the identification of specific protocols.

How do we gather this dataset?

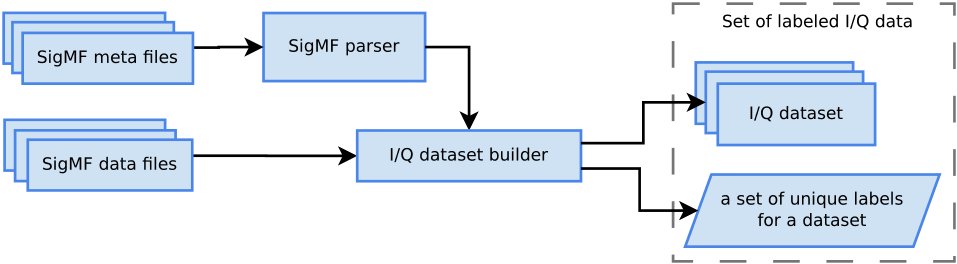

First, we collect IQ sequences in the Signal Metadata Format (SigMF), a new but already well-adopted format in the wireless community. A SigMF recording consists of a data file (.sigmf-data) containing the collected samples and a corresponding metadata file (.sigmf-meta) in JSON format. The metadata file holds general information about the samples as well as annotations for notable data segments.

The current data processing pipeline involves the following steps:

- A directory containing SigMF recordings is passed to the parser as input.

- The parser reads the data segments listed in the annotations section of each .sigmf-meta file, keeping only those with non-empty descriptions.

- Each description determines the dataset label, which in our case is the modulation type.
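The parsing steps above could look roughly like this in Node.js. This is a sketch, not the project's parser, and it rests on two assumptions. First, each .sigmf-meta file sits next to a .sigmf-data file with the same base name, which is the SigMF recording convention. Second, the label is taken verbatim from each annotation's `core:description` field. The function names `labelledSegments` and `parseDirectory` are hypothetical.

```javascript
const fs = require("fs");
const path = require("path");

// Extract labelled segments from one parsed .sigmf-meta object. Only
// annotations with a non-empty core:description are kept, and the
// description text becomes the label (the modulation type).
function labelledSegments(meta) {
  const annotations = Array.isArray(meta.annotations) ? meta.annotations : [];
  return annotations
    .filter((a) => typeof a["core:description"] === "string" && a["core:description"].trim() !== "")
    .map((a) => ({
      label: a["core:description"].trim(),
      sampleStart: a["core:sample_start"],
      sampleCount: a["core:sample_count"], // optional in SigMF, may be undefined
    }));
}

// Scan one directory for *.sigmf-meta files and collect their labelled
// segments, together with the path of the matching *.sigmf-data file.
function parseDirectory(dir) {
  const results = [];
  for (const name of fs.readdirSync(dir)) {
    if (!name.endsWith(".sigmf-meta")) continue;
    const metaPath = path.join(dir, name);
    const meta = JSON.parse(fs.readFileSync(metaPath, "utf8"));
    const dataPath = metaPath.replace(/\.sigmf-meta$/, ".sigmf-data");
    const datatype = (meta.global || {})["core:datatype"];
    for (const seg of labelledSegments(meta)) {
      results.push({ ...seg, dataPath, datatype });
    }
  }
  return results;
}

// Usage with an in-memory metadata object (values are made up):
const exampleMeta = {
  global: { "core:datatype": "ci16_le", "core:version": "1.0.0" },
  captures: [{ "core:sample_start": 0 }],
  annotations: [
    { "core:sample_start": 0, "core:sample_count": 4096, "core:description": "QPSK" },
    { "core:sample_start": 4096, "core:sample_count": 1024, "core:description": "" },
  ],
};
console.log(labelledSegments(exampleMeta)); // one segment, labelled "QPSK"
```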

Later on, we plan to optimize this pipeline by using TensorFlow’s methods for loading, pre-processing, and batching data.

Dataset Preparation Pipeline

Preparing the input data for the ML model involves these steps:

- Converting the I/Q data to float32

- Slicing the converted data into sample chunks

- Constructing a 2D tensor from each chunk, with I-values along the X coordinate and Q-values along the Y coordinate

- Convolving the resulting matrix into a 2D tensor.
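A minimal sketch of the first three steps follows. It assumes three things, none of which comes from the app itself:

- the recordings use SigMF’s "ci16_le" datatype (interleaved little-endian int16 I/Q);
- "normalized" means scaling each chunk by its peak I/Q magnitude;
- the 2D tensor is a fixed-size histogram of I/Q points.

The grid size, chunk size, and function names are illustrative choices. In TensorFlow.js, the resulting array could then be wrapped with `tf.tensor2d()` before being fed to the convolutional layers.

```javascript
// Step 1 (assumes SigMF datatype "ci16_le"): interleaved little-endian int16
// I/Q samples -> float32, scaled to roughly [-1, 1).
function ci16leToFloat32(buf) {
  const n = Math.floor(buf.length / 2); // number of int16 values (I and Q)
  const out = new Float32Array(n);
  for (let k = 0; k < n; k++) out[k] = buf.readInt16LE(2 * k) / 32768;
  return out; // layout: I0, Q0, I1, Q1, ...
}

// Step 2: slice the interleaved stream into chunks of `chunkSize` complex
// samples (2 * chunkSize floats); a trailing partial chunk is dropped.
function sliceChunks(iq, chunkSize) {
  const chunks = [];
  const step = 2 * chunkSize;
  for (let off = 0; off + step <= iq.length; off += step) {
    chunks.push(iq.subarray(off, off + step));
  }
  return chunks;
}

// Step 3: build a gridSize x gridSize constellation image with I along X and
// Q along Y (+Q in the top row). The chunk is normalized by its peak |I| or |Q|
// so the image does not depend on signal level; counts are scaled to [0, 1].
function constellationImage(chunk, gridSize = 32) {
  let peak = 0;
  for (const v of chunk) peak = Math.max(peak, Math.abs(v));
  const img = Array.from({ length: gridSize }, () => new Array(gridSize).fill(0));
  if (peak === 0) return img; // silent chunk: empty image
  let maxCount = 0;
  for (let k = 0; k < chunk.length; k += 2) {
    const i = chunk[k] / peak;
    const q = chunk[k + 1] / peak; // both now in [-1, 1]
    const x = Math.min(gridSize - 1, Math.floor(((i + 1) / 2) * gridSize));
    const y = Math.min(gridSize - 1, Math.floor(((1 - q) / 2) * gridSize));
    img[y][x] += 1;
    maxCount = Math.max(maxCount, img[y][x]);
  }
  return img.map((row) => row.map((c) => c / maxCount));
}

// Usage: synthetic QPSK symbols, repeated 8 times and encoded as ci16_le.
const symbols = [[1, 1], [-1, 1], [-1, -1], [1, -1]];
const buf = Buffer.alloc(8 * symbols.length * 2 * 2); // repeats * symbols * (I, Q) * 2 bytes
let off = 0;
for (let r = 0; r < 8; r++) {
  for (const [i, q] of symbols) {
    off = buf.writeInt16LE(i * 16384, off);
    off = buf.writeInt16LE(q * 16384, off);
  }
}
const iq = ci16leToFloat32(buf);
const chunks = sliceChunks(iq, 16);
const img = constellationImage(chunks[0], 32);
console.log(chunks.length, img[0][0], img[0][31], img[31][0], img[31][31]);
```

Normalizing per chunk keeps the four QPSK points in the image corners regardless of received power. This is one plausible reading of "normalized vector IQ diagrams", not necessarily the app’s exact scheme.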

From Signal to IQ Constellation: Preparing input for the CNN

In summary:

- We chose Node.js to describe our model and dataset, avoiding the additional costs that bringing in Python might incur. Node.js has proven well suited to the task, as it provides access to key libraries, including TensorFlow (through TensorFlow.js).

- There are numerous future development opportunities. In the short term, we plan to update the dataset and expand the recognition to include more modulation types.

- Machine-learning applications require substantial resources, such as disk space and CPU time. Using uSDR together with the WSDR platform lets users make efficient use of these resources.

Please back the uSDR campaign and tell your friends to do the same!